Why do we need SLURM?

SLURM (Simple Linux Utility for Resource Management) is an open-source workload manager designed for Linux clusters. It provides job scheduling and management, resource allocation, and monitoring for computational tasks across multiple nodes.

--> In short, SLURM manages the resources of a cluster's compute nodes and schedules jobs so that all cluster users get a "fair share" of them.

Official documentation: https://slurm.schedmd.com/documentation.html

Basic commands/concepts

SLURM partitions (queues) / sinfo

A partition, sometimes referred to as a "queue", is a subset of the cluster's compute nodes that share a common set of restrictions, such as a maximum run time per job.

To see all available partitions and their restrictions, run sinfo on the cluster (add --clusters=<cluster_name> to query a specific cluster).

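Example on Merlin (GPU cluster gmerlin6):

CLUSTER: gmerlin6

| PARTITION | AVAIL | TIMELIMIT | NODES | STATE | NODELIST |
| --- | --- | --- | --- | --- | --- |
| gwendolen | up | 2:00:00 | 1 | idle | merlin-g-100 |
| gwendolen-long | up | 8:00:00 | 1 | idle | merlin-g-100 |
| gpu* | up | 7-00:00:00 | 6 | mix | merlin-g-[002,004,006,010,013-014] |
| gpu* | up | 7-00:00:00 | 7 | alloc | merlin-g-[003,005,007,009,011-012,015] |
| gpu* | up | 7-00:00:00 | 1 | idle | merlin-g-008 |
| gpu-short | up | 2:00:00 | 6 | mix | merlin-g-[002,004,006,010,013-014] |
| gpu-short | up | 2:00:00 | 7 | alloc | merlin-g-[003,005,007,009,011-012,015] |
| gpu-short | up | 2:00:00 | 2 | idle | merlin-g-[001,008] |

The asterisk marks the default partition (gpu), which is used when no partition is requested. TIMELIMIT is the maximum run time per job (days-hours:minutes:seconds). In the STATE column, idle means the nodes are free, mix means they are partially allocated, and alloc means they are fully allocated.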
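The default sinfo columns can also be processed in a script. The sketch below uses a hypothetical helper named idle_nodes_per_partition (it is not a SLURM command). It sums the NODES column of all rows whose STATE is exactly idle, grouped by partition, and reads sinfo output on stdin. It assumes the default column layout shown above and skips the CLUSTER: banner and the header line.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not a SLURM command): sum idle nodes per partition
# from default-format sinfo output read on stdin.
# Assumed columns: PARTITION AVAIL TIMELIMIT NODES STATE NODELIST
idle_nodes_per_partition() {
  awk '
    /^CLUSTER:/ || $1 == "PARTITION" { next }  # skip cluster banner and header
    $5 == "idle" {                             # exact state "idle" only
      part = $1
      sub(/\*$/, "", part)                     # drop the default-partition marker "*"
      idle[part] += $4                         # NODES column
    }
    END { for (p in idle) print p, idle[p] }
  ' | LC_ALL=C sort                            # fixed output order
}
```

Usage: sinfo --clusters=gmerlin6 | idle_nodes_per_partition. For the example output above, this prints gpu 1, gpu-short 2, gwendolen 1 and gwendolen-long 1.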

Monitoring current (and past) jobs / squeue

To check how "busy" the cluster currently is, run:

- squeue : shows all jobs (of the local cluster)

- squeue -u $USER : shows only your jobs

- squeue --clusters=gmerlin6 : shows all jobs on gmerlin6

- scontrol show job <job_id> --clusters=gmerlin6 : shows details of this specific job on this cluster

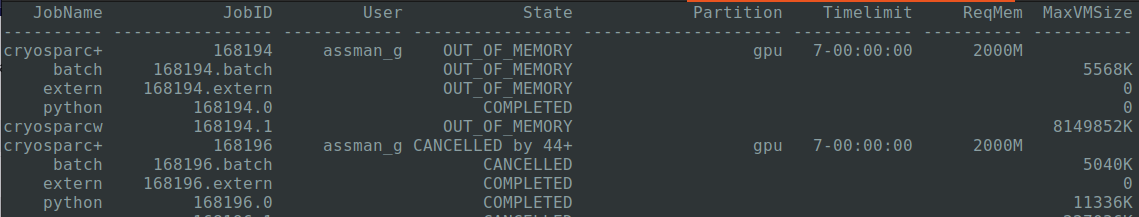
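For a quick overview of how many of your jobs are running or pending, combine squeue's -h (no header) and -o "%T" (job state) options with a small filter. jobs_per_state is a hypothetical helper written for this sketch. It also skips the CLUSTER: line that squeue may print when --clusters is given.

```bash
#!/usr/bin/env bash
# Hypothetical helper: count jobs per state from `squeue -h -o "%T"` output on stdin.
jobs_per_state() {
  awk '
    /^CLUSTER:/ || NF == 0 { next }   # skip cluster banner and empty lines
    { n[$1]++ }                       # one job state per line
    END { for (s in n) print s, n[s] }
  ' | LC_ALL=C sort
}
```

Usage: squeue --clusters=gmerlin6 -u "$USER" -h -o "%T" | jobs_per_state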

sacct -M gmerlin6 --format="JobName,JobID%16,User%12,State%16,partition%20,time%12,ReqMem,MaxVMSize" -u $USER -S 2024-05-01 : shows your jobs on gmerlin6 since a given date (here 1 May 2024), including jobs that have already finished

The other fields that --format can display are listed at https://slurm.schedmd.com/sacct.html (or run sacct --helpformat).
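sacct can also print machine-readable output with the options -n (no header), -P (fields separated by |) and -X (job allocations only, without the individual job steps). The sketch below uses this format. problem_jobs is a hypothetical helper that lists your jobs which did not finish cleanly. The choice of which states count as a problem (FAILED, TIMEOUT, OUT_OF_MEMORY, NODE_FAIL) is an assumption of this example.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print JobID and State of jobs that ended badly.
# Expects `sacct -n -P -X --format=JobID,JobName,State` output on stdin
# (fields separated by "|"). The set of "problem" states is a choice of this sketch.
problem_jobs() {
  awk -F'|' '$3 ~ /^(FAILED|TIMEOUT|OUT_OF_MEMORY|NODE_FAIL)/ { print $1, $3 }'
}
```

Usage: sacct -M gmerlin6 -u "$USER" -S 2024-05-01 -n -P -X --format=JobID,JobName,State | problem_jobs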

Submitting jobs

General submission command:

sbatch myscript.sh : submits the batch script myscript.sh

Simple example script:

#!/bin/bash

#SBATCH --cluster=merlin6

#SBATCH --partition=hourly

#SBATCH --nodes=1

#SBATCH --job-name="test"

echo "hello"

sleep 1m

This script submits a job to the hourly partition of the merlin6 cluster. It prints "hello" and then sleeps (does nothing) for one minute. By default, the output is written to slurm-<jobid>.out in the directory from which the job was submitted. Important: SLURM parameters can also be given directly on the command line, and they override the #SBATCH parameters in the script. Example:

sbatch --job-name="bla" myscript.sh will override the parameter #SBATCH --job-name="test" in the script.

Some important options:

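#SBATCH --mem=<size[units]> : memory per node

#SBATCH --mem-per-cpu=<size[units]> : memory per allocated CPU

#SBATCH --cpus-per-task=<ncpus> : number of CPUs per task

#SBATCH --gpus=[<type>:]<number> : number (and optionally type) of GPUs

#SBATCH --constraint=<Feature> : only use nodes with this feature, e.g. a specific type of GPU

Memory sizes are in megabytes unless a unit suffix (K, M, G or T) is given. --mem and --mem-per-cpu are mutually exclusive.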
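The sketch below converts the size given to --mem, --mem-per-cpu or --mem-per-gpu into megabytes. mem_to_mb is a hypothetical helper. It assumes binary multiples (1G = 1024M). Rounding K values up to whole megabytes is also a choice of this sketch and may not match SLURM's own handling.

```bash
#!/usr/bin/env bash
# Hypothetical helper: convert a --mem style size (e.g. 4096, 8G, 1T) to MB.
# Assumes binary multiples (1G = 1024M); K values are rounded up to whole MB.
mem_to_mb() {
  local spec=${1^^}                 # accept lower-case suffixes (bash 4+)
  local num=${spec%[KMGT]}          # strip an optional one-letter unit suffix
  local unit=${spec#"$num"}
  if [[ ! $num =~ ^[0-9]+$ ]]; then
    echo "invalid size: $1" >&2
    return 1
  fi
  case $unit in
    K)    echo $(( (10#$num + 1023) / 1024 )) ;;
    ''|M) echo $(( 10#$num )) ;;    # no suffix means megabytes
    G)    echo $(( 10#$num * 1024 )) ;;
    T)    echo $(( 10#$num * 1024 * 1024 )) ;;
  esac
}
```

For example, mem_to_mb 4G prints 4096. A value like 4GB is rejected, because the sketch only accepts a single unit letter.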

Example from Relion:

#SBATCH --job-name=r401profile-4gpu

#SBATCH --partition=gpu

#SBATCH --clusters=gmerlin6

#SBATCH --gpus=4

#SBATCH --nodes=1

#SBATCH --mem-per-gpu 25600

#SBATCH --ntasks=5

#SBATCH --cpus-per-task=4

#SBATCH --error=Refine3D/job038/run.err

#SBATCH --output=Refine3D/job038/run.out

#SBATCH --open-mode=append

#SBATCH --time=1-00:00:00
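The resources requested by this Relion header add up as follows. The job asks for 5 tasks × 4 CPUs per task = 20 CPUs. It asks for 4 GPUs × 25600 MB per GPU = 102400 MB (100 GB) of memory, all on one node. The hypothetical helper below only makes this arithmetic explicit. It assumes that memory is given in MB, SLURM's default unit.

```bash
#!/usr/bin/env bash
# Hypothetical helper: total CPUs and memory requested by a single-node GPU job.
# Args: ntasks cpus_per_task gpus mem_per_gpu_mb
job_footprint() {
  local ntasks=$1 cpus_per_task=$2 gpus=$3 mem_per_gpu=$4
  local cpus=$(( ntasks * cpus_per_task ))   # --ntasks x --cpus-per-task
  local mem=$(( gpus * mem_per_gpu ))        # --gpus x --mem-per-gpu
  echo "CPUs=$cpus GPUs=$gpus Mem=${mem}MB"
}
```

Usage: job_footprint 5 4 4 25600 prints CPUs=20 GPUs=4 Mem=102400MB.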

Cancelling jobs

scancel <jobid> --clusters=<cluster_name>
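To cancel a job from a script, you need its job ID and cluster. With sbatch --parsable, sbatch prints only the job ID, followed by ;<cluster_name> if a cluster name is present. The hypothetical helpers below split that output.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: split `sbatch --parsable` output ("<jobid>" or "<jobid>;<cluster>").
parsable_job_id() {
  printf '%s\n' "${1%%;*}"          # everything before the first ";"
}
parsable_cluster() {
  case $1 in
    *\;*) printf '%s\n' "${1#*;}" ;;  # everything after the first ";"
    *)    printf '\n' ;;              # no cluster name present
  esac
}
```

Usage: out=$(sbatch --parsable myscript.sh), then scancel --clusters="$(parsable_cluster "$out")" "$(parsable_job_id "$out")". This assumes the cluster name is present in the output, as it should be when the job was submitted to a specific cluster.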

Priority/Queuing of jobs

SLURM works with a "fair share" concept: over time, all users should get roughly the same amount of computational resources. To achieve this, every submitted job is assigned a priority (a number) that determines the order in which pending jobs are started on the cluster. The priority depends on several factors, such as the job's age (time spent waiting), the partition, the job size and the user's recent usage (fair share).

Example: Users who have consumed fewer resources recently will have their jobs prioritized higher, while those who have used more will have lower priority, promoting balanced and fair resource distribution.
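The formula below assumes the cluster uses SLURM's multifactor priority plugin; the plugin and the weights are site configuration. Under that plugin, the priority is a weighted sum of factors that each lie between 0 and 1. Simplified from the SLURM documentation, with the "…" standing for further terms such as the site, association and TRES factors:

```latex
P_{\text{job}} = w_{\text{age}}\,f_{\text{age}} + w_{\text{fs}}\,f_{\text{fs}} + w_{\text{size}}\,f_{\text{size}} + w_{\text{part}}\,f_{\text{part}} + w_{\text{QOS}}\,f_{\text{QOS}} + \dots - f_{\text{nice}},
\qquad 0 \le f_{\text{age}}, f_{\text{fs}}, f_{\text{size}}, f_{\text{part}}, f_{\text{QOS}} \le 1
```

The weights w correspond to the PriorityWeightAge, PriorityWeightFairshare, PriorityWeightJobSize, PriorityWeightPartition and PriorityWeightQOS settings in slurm.conf. The sprio command shows these factors for pending jobs (e.g. sprio -j <job_id>), and sshare shows fair-share usage.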

Some important info/examples:

- If you request 7 days for a job (the maximum time limit), the JobSize parameter becomes larger, which lowers your job's priority. A long time limit also makes it harder for the scheduler to start your job early in gaps between other jobs (backfilling) --> request only the time that you will most likely need (plus a bit of backup time)

- If you know that your job runs for at most one hour, go for the hourly (merlin6) or gpu-short (gmerlin6) partitions; gpu-short even accepts jobs of up to two hours

- Generally speaking, try to minimize your resource request to maximize your priority!

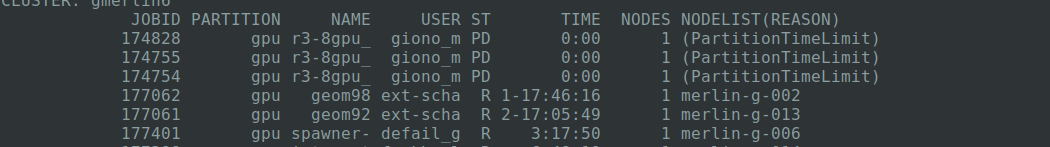

- If a job is pending for an unexpectedly long time, check why it is waiting in the NODELIST(REASON) column of the squeue output. Adjust your job accordingly if possible, or ask the admins to have a look if you are not sure about the issue.

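In the screenshot above, the job requested a time limit that is not valid for the partition. This can be fixed by requesting a time limit within the partition's limit, or by choosing a partition with a longer one.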
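The time-limit advice above can be checked before submitting. The helpers below are hypothetical (not SLURM commands). They convert SLURM time strings to minutes, add a safety margin, format the result for --time, and test whether a request fits a partition's TIMELIMIT as reported by sinfo. They do not handle the value UNLIMITED.

```bash
#!/usr/bin/env bash
# Hypothetical helpers (not SLURM commands) for working with --time values.

# Convert a SLURM time string to whole minutes; seconds are rounded up.
# Accepts: M, M:S, H:M:S, D-H, D-H:M, D-H:M:S (not "UNLIMITED").
slurm_time_to_minutes() {
  local t=$1 days=0 h=0 m=0 s=0 parts
  if [[ $t == *-* ]]; then
    days=${t%%-*}
    IFS=: read -r h m s <<< "${t#*-}"            # hours[:minutes[:seconds]]
  else
    IFS=: read -ra parts <<< "$t"
    case ${#parts[@]} in
      1) m=${parts[0]} ;;                                  # minutes
      2) m=${parts[0]}; s=${parts[1]} ;;                   # minutes:seconds
      3) h=${parts[0]}; m=${parts[1]}; s=${parts[2]} ;;    # hours:minutes:seconds
      *) echo "invalid time: $1" >&2; return 1 ;;
    esac
  fi
  # 10# forces base 10, so fields such as "08" are not parsed as octal
  echo $(( 10#$days * 1440 + 10#${h:-0} * 60 + 10#${m:-0} + (10#${s:-0} + 59) / 60 ))
}

# Expected runtime plus a safety margin in percent, rounded up to whole minutes.
with_margin() {
  local minutes=$1 percent=$2
  echo $(( (minutes * (100 + percent) + 99) / 100 ))
}

# Format minutes as D-HH:MM:SS (days-hours:minutes:seconds) for --time.
minutes_to_slurm_time() {
  local total=$1
  printf '%d-%02d:%02d:00\n' $(( total / 1440 )) $(( total % 1440 / 60 )) $(( total % 60 ))
}

# Exit status 0 if the requested time fits within the partition's TIMELIMIT.
fits_partition_limit() {
  local req lim
  req=$(slurm_time_to_minutes "$1") || return 2
  lim=$(slurm_time_to_minutes "$2") || return 2
  (( req <= lim ))
}
```

For example, a job expected to run 100 minutes with a 20 % margin gives with_margin 100 20 = 120 minutes. minutes_to_slurm_time 120 turns that into 0-02:00:00, which still fits the 2:00:00 limit of gpu-short.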