BIO applications

assman_g edited this page 2024-06-24 08:46:50 +02:00

Relion

Tips & Tricks & Comments

- Relion can be loaded with `module load relion`.

- Go through the tutorial provided by Relion.

- Default submission scripts are available that request different types of hardware.

- On the BIO page, recommendations for the different job types are given.

- Make sure to provide "Extra Slurm Arguments" if you want to change parameters from the default scripts (for instance `--time`; keep your SLURM priority in mind).

- If you get OOM (out-of-memory) errors, the cause can be either "general" memory or GPU card memory (the latter usually mentions "cuda" in the error). You can either increase the memory (`--mem=XXX`) or specifically request the largest GPU card (merlin-g-015, A5000 GPU card with 24 GB memory) with the option `--constraint=gpumem_24gb`.

- Debugging: in case of errors, go to the job folder and check your scripts, the error messages and the log files.
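The OOM advice above can be turned into a quick check of a failed job folder. The sketch below is a hypothetical helper (`suggest_oom_args` is not part of Relion or the Merlin setup): it greps the `*.err`/`*.out` files of a job folder (Relion writes e.g. `run.out` and `run.err` there) and prints a suggestion for "Extra Slurm Arguments": `--constraint=gpumem_24gb` if an out-of-memory message mentions CUDA, otherwise a larger `--mem`. The message patterns and the default of 64G are assumptions; adapt them to what your logs actually show.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of Relion): suggest "Extra Slurm Arguments"
# after a job failed with an out-of-memory (OOM) error.
# Usage: suggest_oom_args <job_folder> [memory, default 64G]
suggest_oom_args() {
  local job_dir="$1" mem="${2:-64G}"   # 64G is only an illustrative value
  local logs=() f
  for f in "$job_dir"/*.err "$job_dir"/*.out; do
    if [ -f "$f" ]; then               # skip glob patterns that matched nothing
      logs+=("$f")
    fi
  done
  if [ "${#logs[@]}" -eq 0 ]; then
    echo "no .err/.out files found in $job_dir" >&2
    return 1
  fi
  # GPU memory: the OOM message usually mentions cuda on the same line
  if grep -qiE 'cuda.*out of memory|out of memory.*cuda' "${logs[@]}"; then
    echo "--constraint=gpumem_24gb"
  # "General" memory: a Slurm OOM kill or a plain out-of-memory message
  elif grep -qiE 'oom[-_]kill|out[ -]of[ -]memory' "${logs[@]}"; then
    echo "--mem=$mem"
  else
    echo "no out-of-memory message found" >&2
    return 2
  fi
}
```

For example, after sourcing the file, `suggest_oom_args Refine3D/job012 100G` prints either the GPU constraint or `--mem=100G` (the job folder name is only an example).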

Cryosparc

Cryosparc official documentation

Tips & Tricks & Comments

- Follow the installation procedure very carefully, as Cryosparc is unfortunately not available as a module (the setup will probably be different on Merlin7).

- It is also possible to create another "user" from a running Cryosparc instance, so not everybody needs to do the full installation.

- Choose the "project" folders wisely: not in your home or `/data/user/$USER` folders (not enough disk quota).

- The web interface can be used in your local browser if you are on the PSI network (for instance via VPN), or in the NoMachine browser.

- Cryosparc has several lanes (similar to the Relion scripts) with preconfigured SLURM parameters. Change those if you want and add your personal lanes (see the BIO documentation).

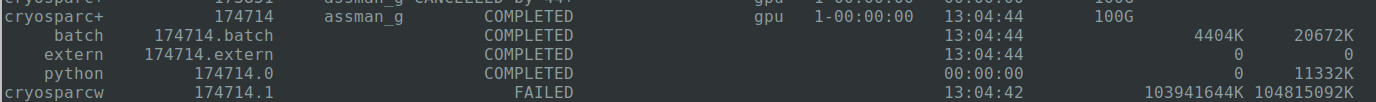

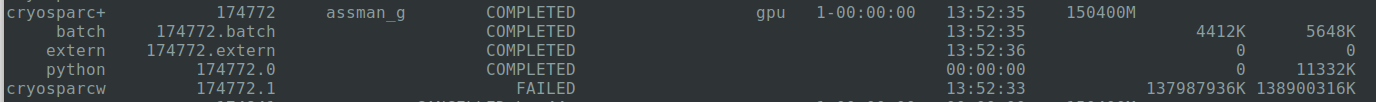
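Adding a personal lane can be sketched with CryoSPARC's `cryosparcm cluster dump <lane>` (writes the lane's `cluster_info.json` and `cluster_script.sh` into the current folder) and `cryosparcm cluster connect` (registers the lane defined by the two files in the current folder). The helper names (`set_lane_name`, `set_lane_time`, `clone_lane`) are hypothetical, and the sketch assumes the dumped script contains an `#SBATCH --time=` line like the submission script shown further down. Check the edited files before connecting them.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for creating a personal Cryosparc lane from an existing one.
# Keep lane names and times free of "/" and "&" (they are used inside sed).

# Give the lane a new name so that "connect" adds a new lane instead of
# overwriting the original (assumes a "name": "..." entry in cluster_info.json).
set_lane_name() {
  local json="$1" new_name="$2"
  sed -i -E "s/(\"name\"[[:space:]]*:[[:space:]]*)\"[^\"]*\"/\1\"${new_name}\"/" "$json"
}

# Change the wall time requested by the lane's submission script template.
set_lane_time() {
  local script="$1" new_time="$2"
  sed -i -E "s/^#SBATCH --time=.*/#SBATCH --time=${new_time}/" "$script"
}

# Full workflow: needs the cryosparcm of your running master instance in PATH.
# Usage: clone_lane <existing_lane> <new_lane> <time, e.g. 1-00:00:00>
clone_lane() {
  local src_lane="$1" new_lane="$2" new_time="$3" workdir
  workdir="$(mktemp -d)" || return 1
  (
    cd "$workdir" || exit 1
    cryosparcm cluster dump "$src_lane" || exit 1  # writes cluster_info.json + cluster_script.sh
    set_lane_name cluster_info.json "$new_lane"
    set_lane_time cluster_script.sh "$new_time"
    cryosparcm cluster connect                      # registers the lane from this folder
  )
}
```

For example, `clone_lane "<existing lane>" my-gpu-1day 1-00:00:00` would add a copy of an existing lane that requests one day instead of seven. Renaming first matters: connecting a lane with an existing name updates that lane instead of adding a new one.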

- OOM errors: see Relion.

- Debugging the Cryosparc instance itself: run `cryosparcm status` and check whether everything is running properly, or check the instance logs in the "Admin Section".

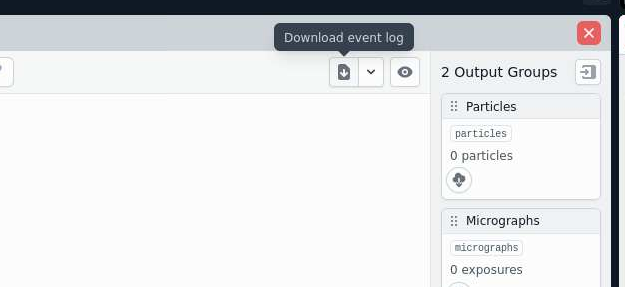
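To spot a stopped service quickly, the output of `cryosparcm status` can be filtered. This is a sketch that assumes the status output lists each service as `<name> <STATE> ...` in supervisor style (e.g. `command_core RUNNING pid ...`); the function names are hypothetical, and services ending in `_dev` are skipped because they are normally not started.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: report Cryosparc services that are not RUNNING.

# Reads "cryosparcm status" output on stdin and prints "<service> <STATE>"
# for every service in a non-running supervisor state (ignoring *_dev services).
not_running_services() {
  awk '$2 ~ /^(STOPPED|STARTING|BACKOFF|STOPPING|EXITED|FATAL|UNKNOWN)$/ && $1 !~ /_dev$/ {
         print $1, $2
       }'
}

# Returns 0 if all services run, 1 otherwise (and lists the offending services).
check_cryosparc() {
  local bad
  bad="$(cryosparcm status | not_running_services)"
  if [ -n "$bad" ]; then
    printf 'Not running:\n%s\n' "$bad"
    return 1
  fi
  echo "All Cryosparc services are running."
}
```

If a service shows up here, its log in the "Admin Section" is the next place to look.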

- Debugging jobs: check the Event Log in the browser or export it, especially if you want to provide it to the admins.

Use case: the submission script that a Cryosparc lane generated for job J8 of project P1 (`queue_sub_script.sh` in the job folder):

```bash
#!/usr/bin/env bash
# Available variables:
# script_path_abs=/data/project/bio/benoit/Roger/2024_pRB22_b/20201023_pRB22_b/CS-prb22-b-cryosparc/J8/queue_sub_script.sh
# - the absolute path to the generated submission script
# run_cmd=/data/user/benoit_r/cryosparc/cryosparc_worker/bin/cryosparcw run --project P1 --job J8 --master_hostname merlin-l-01.psi.ch --master_command_core_port 39042 > /data/project/bio/benoit/Roger/2024_pRB22_b/20201023_pRB22_b/CS-prb22-b-cryosparc/J8/job.log 2>&1
# - the complete command-line string to run the job
# num_cpu=8
# - the number of CPUs needed
# num_gpu=1
# - the number of GPUs needed. Note: the code will use this many GPUs
# starting from dev id 0. The cluster scheduler or this script have the
# responsibility of setting CUDA_VISIBLE_DEVICES so that the job code
# ends up using the correct cluster-allocated GPUs.
# ram_gb=8.0
# - the amount of RAM needed in GB
# job_dir_abs=/data/project/bio/benoit/Roger/2024_pRB22_b/20201023_pRB22_b/CS-prb22-b-cryosparc/J8
# - absolute path to the job directory
# project_dir_abs=/data/project/bio/benoit/Roger/2024_pRB22_b/20201023_pRB22_b/CS-prb22-b-cryosparc
# - absolute path to the project dir
# job_log_path_abs=/data/project/bio/benoit/Roger/2024_pRB22_b/20201023_pRB22_b/CS-prb22-b-cryosparc/J8/job.log
# - absolute path to the log file for the job
# worker_bin_path=/data/user/benoit_r/cryosparc/cryosparc_worker/bin/cryosparcw
# - absolute path to the cryosparc worker command
# run_args=--project P1 --job J8 --master_hostname merlin-l-01.psi.ch --master_command_core_port 39042
# - arguments to be passed to cryosparcw run
# project_uid=P1
# - uid of the project
# job_uid=J8
# - uid of the job
# job_creator=rb
# - name of the user that created the job (may contain spaces)
# cryosparc_username=roger.benoit@psi.ch
# - cryosparc username of the user that created the job (usually an email)

#SBATCH --job-name=cryosparc_P1_J8
#SBATCH --output=/data/project/bio/benoit/Roger/2024_pRB22_b/20201023_pRB22_b/CS-prb22-b-cryosparc/J8/job.log.out
#SBATCH --error=/data/project/bio/benoit/Roger/2024_pRB22_b/20201023_pRB22_b/CS-prb22-b-cryosparc/J8/job.log.err
#SBATCH --ntasks=1
#SBATCH --threads-per-core=1
#SBATCH --mem-per-cpu=2000M
#SBATCH --time=7-00:00:00
#SBATCH --partition=gpu
#SBATCH --cluster=gmerlin6
#SBATCH --gpus=1
#SBATCH --cpus-per-gpu=4

# Print hostname, for debugging
echo "Job Id: $SLURM_JOBID"
echo "Host: $SLURM_NODELIST"

export SRUN_CPUS_PER_TASK=$((${SLURM_GPUS:-1} * ${SLURM_CPUS_PER_GPU:-1}))
srun /opt/psi/Programming/anaconda/2019.07/conda/bin/python -c 'import os; print(f"CPUs: {os.sched_getaffinity(0)}")'

# Make sure this matches the version of cuda used to compile cryosparc
module purge

srun /data/user/benoit_r/cryosparc/cryosparc_worker/bin/cryosparcw run --project P1 --job J8 --master_hostname merlin-l-01.psi.ch --master_command_core_port 39042 > /data/project/bio/benoit/Roger/2024_pRB22_b/20201023_pRB22_b/CS-prb22-b-cryosparc/J8/job.log 2>&1
EXIT_CODE=$?
echo "Exit code: $EXIT_CODE"
# Return the exit code of cryosparcw (not the one of the echo above)
exit $EXIT_CODE
```
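When debugging a job from the command line, the files written by the submission script above can be checked directly: `job.log.out` (SLURM stdout) contains the `Exit code: N` line, `job.log.err` the SLURM error output and `job.log` the cryosparcw output. The helper below (`job_summary`, a hypothetical name) is a minimal sketch assuming exactly these file names.

```bash
#!/usr/bin/env bash
# Hypothetical helper: summarise a finished Cryosparc cluster job from its job folder.
# Assumes the files written by the submission script: job.log.out (with the
# "Exit code: N" line), job.log.err (SLURM stderr) and job.log (cryosparcw output).
# Usage: job_summary <job_folder>
job_summary() {
  local job_dir="$1" code=""
  # Take the last "Exit code: N" line, if the file exists
  if [ -f "$job_dir/job.log.out" ]; then
    code="$(sed -n 's/^Exit code: \([0-9]*\)$/\1/p' "$job_dir/job.log.out" | tail -n 1)"
  fi
  echo "exit code: ${code:-unknown}"
  # Show the end of the non-empty log files
  if [ -s "$job_dir/job.log.err" ]; then
    echo "--- last lines of job.log.err ---"
    tail -n 20 "$job_dir/job.log.err"
  fi
  if [ -s "$job_dir/job.log" ]; then
    echo "--- last lines of job.log ---"
    tail -n 20 "$job_dir/job.log"
  fi
}
```

For example, `job_summary <job folder>`. An exit code of 137 (128 + signal 9, SIGKILL) often means the job was killed, for instance because it ran out of memory; in that case the OOM tips for Relion apply.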

Alphafold

Tips & Tricks & Comments

- The latest Alphafold (3.0) is not available as open source, which is why it is not installed on Merlin yet. Instead, the latest open-source version (2.3.2) is installed as a Pmodule.

- For Alphafold, it is crucial to pick the correct GPUs depending on the sequence length (number of amino acids). Some recommendations are given in the BIO Alphafold documentation.

- The largest GPU on Merlin6 is the A5000 (24 GB memory, max. 7 days runtime), which is only sufficient for sequence lengths up to ~2000 AA. If you want to go bigger, you have to run on RA (40 GB memory, max. 7 days runtime). Merlin7 will provide larger GPUs, so large complexes can then be run on Merlin7 as well.
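The rule of thumb above (~2000 AA fit into the 24 GB GPU on Merlin6, bigger inputs need the 40 GB GPUs on RA) can be sketched as a quick check on the input FASTA file. The function names are hypothetical, and counting the total length of all chains for complexes is an assumption; the BIO Alphafold documentation remains the reference.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: count the residues in a FASTA file and suggest where to
# run Alphafold, using the ~2000 AA rule of thumb for Merlin6's 24 GB GPU.
# Assumption: for complexes, the total length of all chains is what matters.

# Count sequence letters, ignoring header lines (">...") and whitespace.
count_residues() {
  grep -v '^>' "$1" | tr -d ' \t\r\n' | wc -c
}

# Usage: suggest_af_target <input.fasta> [limit in AA, default 2000]
suggest_af_target() {
  local fasta="$1" limit="${2:-2000}" n
  [ -r "$fasta" ] || { echo "cannot read $fasta" >&2; return 1; }
  n="$(count_residues "$fasta")"
  n=$((n))   # drop any padding spaces added by wc
  if [ "$n" -le "$limit" ]; then
    echo "$n AA: Merlin6, --constraint=gpumem_24gb"
  else
    echo "$n AA: too large for 24 GB, run on RA (40 GB GPU)"
  fi
}
```

The optional second argument lets you use a more conservative limit than 2000 AA if the BIO recommendations suggest one for your case.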