TODO

-

[from Leïla] Before Step 5, the file should contain only data from the current year.
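The sketch below shows one way to apply the current-year filter. It assumes the data arrive as rows of `(timestamp, value)` pairs with naive `datetime` timestamps. The function name `keep_current_year` is hypothetical and not part of the data chain.

```python
from datetime import datetime
from typing import Iterable, List, Optional, Tuple

Row = Tuple[datetime, float]


def keep_current_year(rows: Iterable[Row], now: Optional[datetime] = None) -> List[Row]:
    """Return only the rows whose timestamp falls in the current calendar year.

    `now` can be passed explicitly to make the filter reproducible (e.g. in tests).
    """
    now = now if now is not None else datetime.now()
    return [row for row in rows if row[0].year == now.year]
```

Passing `now` explicitly lets reprocessing runs and tests select a fixed year instead of depending on the wall clock.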

-

[from Leïla] Ebas converter. Change "9999.999" to "9999999.9999" in the header.
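"9999999.9999" itself contains the substring "9999.999". Running a plain `str.replace` twice would therefore corrupt values that were already converted. The sketch below avoids this by matching the old code only when it is not embedded in a longer number. It assumes the header is processed as plain text, line by line, and the helper name is hypothetical.

```python
import re

# Match "9999.999" only when it is not part of a longer number,
# so already-converted "9999999.9999" values are left untouched.
_OLD_MISSING = re.compile(r"(?<![\d.])9999\.999(?![\d.])")
NEW_MISSING = "9999999.9999"


def update_missing_value_code(header_line: str) -> str:
    """Replace the old missing-value code in one header line."""
    return _OLD_MISSING.sub(NEW_MISSING, header_line)
```

The replacement is idempotent, so re-running the converter on an already updated header leaves it unchanged.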

-

[from Leïla] Ebas converter. Update the detection limit values in the L2 header: take the 1h values from limits_of_detection.yaml.

-

[from Leïla] Ebas converter. Correct errors (uncertainties) so that they cannot be lower than 0.0001.
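A minimal sketch of the lower bound follows. It assumes the uncertainties are held as plain floats and that missing values are NaN at this stage. That is an assumption: the converter may encode missing values differently. The names are hypothetical.

```python
import math
from typing import Iterable, List

MIN_UNCERTAINTY = 1e-4  # lower bound stated in the TODO item


def floor_uncertainties(errors: Iterable[float], floor: float = MIN_UNCERTAINTY) -> List[float]:
    """Raise every uncertainty below `floor` to `floor`; keep NaN (missing) as-is."""
    return [e if math.isnan(e) else max(e, floor) for e in errors]
```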

-

[from Leïla] Flag using validity thresholds. Change the flow rate values to 10% of the reference flow rate (flow rate ref).
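One reading of this item is that a flow rate sample is flagged invalid when it deviates from the reference flow rate by more than 10% of that reference. The sketch below follows that interpretation, which is an assumption. The function and argument names are hypothetical.

```python
from typing import List, Sequence

RELATIVE_TOLERANCE = 0.10  # 10% of the reference flow rate (assumed interpretation)


def flag_flow_rate(flow: Sequence[float], flow_ref: float,
                   tolerance: float = RELATIVE_TOLERANCE) -> List[bool]:
    """Return True for samples outside flow_ref +/- tolerance * |flow_ref|."""
    limit = tolerance * abs(flow_ref)
    return [abs(f - flow_ref) > limit for f in flow]
```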

-

[from Leïla] In Step 1, creating a new collection should be optional rather than automatic.
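If Step 1 is driven from the command line, one way to make collection creation opt-in is a boolean flag that defaults to off. The `--create-collection` option and `build_step1_parser` are hypothetical, and Step 1 may take its settings from a config file instead.

```python
import argparse


def build_step1_parser() -> argparse.ArgumentParser:
    parser = argparse.ArgumentParser(description="Step 1 of the data chain (sketch).")
    # Opt-in: a new collection is only created when the user asks for it.
    parser.add_argument(
        "--create-collection",
        action="store_true",
        help="create a new collection instead of reusing an existing one",
    )
    return parser
```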

-

[from Leïla] The data chain (except Step 5) should also include Org_mx and Ord_err. To discuss together.

-

[from Leïla] Step 4.1. Add a step to verify ACSM data against external instruments (MPSS, eBC). To discuss together.

-

[New] Create data flows to validate and replace the existing data chain parameters: data/campaignData/param/ -> pipelines/params, and campaignDescriptor.yaml -> ACSM data converter.

-

[New] DIMA. Add dictionaries to explain variables at different levels.

-

[New] DIMA. Modify the data integration pipeline so that it does not add the current datetime to the filename when not specified.
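A minimal sketch of the intended naming rule follows. It assumes the pipeline builds the output name from a stem and an extension. The function name, the `.h5` default and the timestamp format are hypothetical, not DIMA's current convention.

```python
from datetime import datetime
from typing import Optional


def build_output_filename(stem: str, ext: str = ".h5",
                          timestamp: Optional[datetime] = None) -> str:
    """Append a timestamp to the filename only when one is explicitly given."""
    if timestamp is None:
        return f"{stem}{ext}"
    return f"{stem}_{timestamp:%Y-%m-%d_%H-%M-%S}{ext}"
```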

-

[New] DIMA. Set the data/ folder as the default and only permitted output folder.
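The sketch below enforces data/ as both the default and the only allowed destination. It assumes data/ is given relative to the working directory. The function name and the `data_root` argument are hypothetical. Paths are resolved before the check, so `..` segments cannot escape the folder.

```python
from pathlib import Path
from typing import Optional, Union

PathLike = Union[str, Path]


def resolve_output_path(filename: str, output_dir: Optional[PathLike] = None,
                        data_root: PathLike = "data") -> Path:
    """Return the output path, defaulting to data/ and refusing anything outside it."""
    root = Path(data_root).resolve()
    target_dir = Path(output_dir).resolve() if output_dir is not None else root
    target = (target_dir / filename).resolve()
    # After resolution, the target must be the root itself or lie beneath it.
    if target != root and root not in target.parents:
        raise ValueError(f"output must be inside {root}, got {target}")
    return target
```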

-

[New] DIMA. Review DataManager File Update: generated CSV files are not transferring properly.

-

[New] DIMA. Ensure that code snippets that open and close HDF5 files do so securely and do not leave the file locked.

-

[New] DIMA. Revise the default CSV file reader and enhance it to infer the datetime column and its format.
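Here is a stdlib-only sketch of the inference step. It assumes a comma-separated file with a header row. It samples the first rows and picks the first column whose values all parse with one candidate format. The function names and the format list are hypothetical, and the DIMA reader may well be built on pandas instead.

```python
import csv
import io
from datetime import datetime
from typing import List, Optional, Tuple

# Candidate formats, tried in order; the first one that parses every sampled
# value wins, so ambiguous layouts (day/month vs month/day) depend on this order.
CANDIDATE_FORMATS = [
    "%Y-%m-%d %H:%M:%S",
    "%Y-%m-%dT%H:%M:%S",
    "%d.%m.%Y %H:%M:%S",
    "%d/%m/%Y %H:%M",
    "%Y-%m-%d",
]


def _parses(value: str, fmt: str) -> bool:
    try:
        datetime.strptime(value.strip(), fmt)
    except ValueError:
        return False
    return True


def infer_datetime_column(text: str, sample_rows: int = 20) -> Optional[Tuple[str, str]]:
    """Return (column name, format) of the first column whose sampled values all
    parse with a single candidate format, or None if no column qualifies."""
    reader = csv.DictReader(io.StringIO(text))
    # zip stops after sample_rows rows without reading the rest of the file.
    rows: List[dict] = [row for _, row in zip(range(sample_rows), reader)]
    for column in reader.fieldnames or []:
        values = [row[column] for row in rows if row.get(column)]
        if not values:
            continue
        for fmt in CANDIDATE_FORMATS:
            if all(_parses(v, fmt) for v in values):
                return column, fmt
    return None
```

Sampling only the first rows keeps inference cheap on large files. The cost is that a malformed timestamp further down is only caught when the full column is parsed.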

-

[New] Learn how to run docker-compose.yaml

-

[New] EBAS_SUBMISSION_STEP. Extend the time filter to ranges, create a function to merge data frames, and construct the file name and output directory dynamically. They are currently hardcoded.
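A minimal sketch of two of these pieces follows: a filter over several time ranges and a file name derived from the covered period. It assumes rows of `(timestamp, value)` pairs and half-open ranges. The function names and the file name pattern are hypothetical, not the EBAS naming convention. The data frame merge is left out because it depends on the data frame library used.

```python
from datetime import datetime
from typing import Iterable, List, Sequence, Tuple

Range = Tuple[datetime, datetime]
Row = Tuple[datetime, float]


def in_any_range(ts: datetime, ranges: Sequence[Range]) -> bool:
    """True if ts lies in any half-open range [start, end)."""
    return any(start <= ts < end for start, end in ranges)


def filter_by_ranges(rows: Iterable[Row], ranges: Sequence[Range]) -> List[Row]:
    """Keep only the rows whose timestamp falls in at least one range."""
    return [row for row in rows if in_any_range(row[0], ranges)]


def submission_filename(station: str, start: datetime, end: datetime,
                        ext: str = ".nas") -> str:
    """Build the output file name from the covered period instead of hardcoding it."""
    return f"{station}_{start:%Y%m%d}_{end:%Y%m%d}{ext}"
```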

-

[New] Finish the command-line interface for visualize_datatable_vars: add modes and the --flags and --dataset options, and save figures to the figures folder in the repo.
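The parser below is one possible shape for this CLI, built with `argparse`. The mode names, the boolean behaviour of `--flags` and the `--output-dir` option are assumptions; only `--flags`, `--dataset` and the figures folder come from the item above.

```python
import argparse
from pathlib import Path
from typing import List, Optional


def build_parser() -> argparse.ArgumentParser:
    parser = argparse.ArgumentParser(
        prog="visualize_datatable_vars",
        description="Plot variables from a data table (CLI sketch).",
    )
    # Mode names are placeholders; the real set of modes is still to be decided.
    parser.add_argument("mode", choices=["timeseries", "histogram"],
                        help="plotting mode")
    parser.add_argument("--dataset", required=True,
                        help="name or path of the dataset to plot")
    parser.add_argument("--flags", action="store_true",
                        help="overlay flagged regions on the plot")
    parser.add_argument("--output-dir", type=Path, default=Path("figures"),
                        help="folder where figures are saved (default: figures/)")
    return parser


def parse(argv: Optional[List[str]] = None) -> argparse.Namespace:
    return build_parser().parse_args(argv)
```

Usage would look like `python visualize_datatable_vars.py timeseries --dataset <name> --flags`.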

-

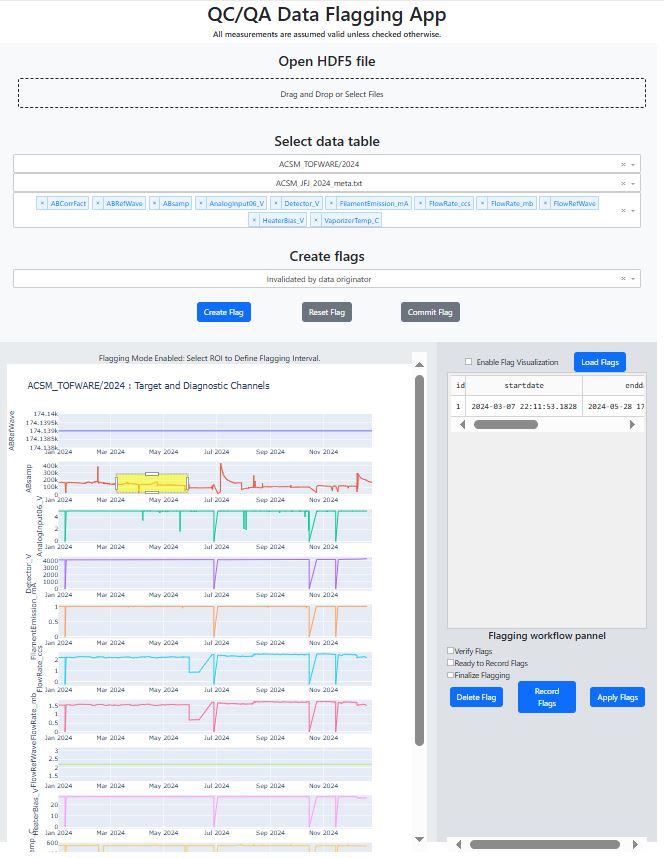

Implement flagging-app specific data operations such as:

- [New item] When "verify flags" on the checklist is active, enable the delete-flag button to delete the flag associated with the active cell in the table.

- [New item] When the "verify" and "ready to transfer" items on the checklist are active, enable the record-flags button to record verified flags into the HDF5 file.

- [New item] When all checklist items are active, enable the apply button to apply flags to the time series data and save it to the HDF5 file.
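The three button rules above reduce to a pure function from the set of active checklist items to enabled buttons. Keeping this logic separate from the Dash callback makes it easy to test. The checklist identifiers below, including the third item, are hypothetical. In a Dash callback these values would be negated and returned to each button's `disabled` property.

```python
from typing import Dict, Set

# Hypothetical checklist item identifiers.
VERIFY = "verify_flags"
READY = "ready_to_transfer"
ALL_ITEMS = {VERIFY, READY, "apply_flags"}


def button_states(active: Set[str]) -> Dict[str, bool]:
    """Map active checklist items to enabled buttons (True = enabled)."""
    return {
        "delete-flag": VERIFY in active,
        "record-flags": {VERIFY, READY} <= active,
        "apply": ALL_ITEMS <= active,
    }
```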

Define a data manager object with apply-flags behavior.

- Define metadata answering: who performed the flagging and the quality assurance tests?

- Update instruments/dictionaries/ACSM_TOFWARE_flags.yaml and instruments/readers/flag_reader.py to describe metadata elements based on the dictionary.

- Update the DIMA data integration pipeline to allow user-defined file naming templates.

- Design and implement a flag visualization feature: when the feature is enabled, clicking a flag in the table displays it as a shaded region on the figure.

- Implement a schema validator for the YAML/JSON representation of the HDF5 metadata.

- Implement updates to 'actris level' and 'processing_script' after an operation is applied to the data/file?
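For the schema validator item above, the sketch below checks that required attributes exist and have the expected type. It is a minimal stdlib-only version, assuming the YAML/JSON representation has already been loaded into a flat dictionary. The attribute keys and their types are assumptions loosely based on 'actris level' and 'processing_script'. A full solution could use the `jsonschema` library instead.

```python
from typing import Any, Dict, List

# Hypothetical minimal schema: required attribute name -> expected Python type.
METADATA_SCHEMA: Dict[str, type] = {
    "actris_level": int,
    "processing_script": str,
}


def validate_metadata(metadata: Dict[str, Any],
                      schema: Dict[str, type] = METADATA_SCHEMA) -> List[str]:
    """Return a list of problems; an empty list means the metadata passed."""
    problems = []
    for key, expected in schema.items():
        if key not in metadata:
            problems.append(f"missing attribute: {key}")
        elif not isinstance(metadata[key], expected):
            problems.append(f"{key}: expected {expected.__name__}, "
                            f"got {type(metadata[key]).__name__}")
    return problems
```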

-

When `Create Flag` is clicked, modify the title to indicate that we are in flagging mode and that ROIs can be drawn by dragging.

-

Update the `Commit Flag` logic: 3. Update the recorded flags directory, and add provenance information to each flag (which instrument and channel it belongs to).

-

Record collected flag information initially in a YAML or JSON file. Is this faster than writing directly to the HDF5 file?

-

Should we actively transfer collected flags by clicking a button? After the commit button is pressed, each flag is now stored in an independent JSON file.
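The sketch below stores each flag in its own JSON file, with provenance from the Commit Flag item (instrument and channel). The field names, the file naming scheme and the function name are hypothetical. Writing to a temporary file and renaming it means a later transfer step never reads a half-written flag.

```python
import json
import uuid
from datetime import datetime, timezone
from pathlib import Path
from typing import Any, Dict


def write_flag(flags_dir: Path, instrument: str, channel: str,
               start: str, end: str, flag_code: int) -> Path:
    """Store one flag, with its provenance, in its own JSON file; return the path."""
    flags_dir.mkdir(parents=True, exist_ok=True)
    record: Dict[str, Any] = {
        "instrument": instrument,  # provenance: instrument the flag belongs to
        "channel": channel,        # provenance: channel the flag belongs to
        "start": start,            # ISO 8601 start of the flagged interval
        "end": end,                # ISO 8601 end of the flagged interval
        "flag_code": flag_code,
        "created_utc": datetime.now(timezone.utc).isoformat(),
    }
    path = flags_dir / f"flag_{uuid.uuid4().hex}.json"
    # Write to a temporary file and rename it into place (atomic on POSIX),
    # so a reader does not pick up a half-written flag.
    tmp_path = flags_dir / (path.name + ".tmp")
    tmp_path.write_text(json.dumps(record, indent=2), encoding="utf-8")
    tmp_path.replace(path)
    return path
```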

-

Enable some form of chunked storage and visualization from the HDF5 file. Iterate over chunks to balance display speed against access time.

- Do I need to modify DIMA?

- What is a good chunk size?

- What Dash component can we use to iterate over the chunks?
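Whatever the answers to these questions, reading the time series in windows comes down to generating row bounds. The sketch below assumes a 1-D (or row-major 2-D) dataset, and the helper name is hypothetical. With h5py, each `(start, stop)` pair would be used as `dataset[start:stop]`, ideally with `chunk_rows` aligned to the dataset's own chunk shape (`dataset.chunks`).

```python
from typing import Iterator, Tuple


def chunk_bounds(n_rows: int, chunk_rows: int) -> Iterator[Tuple[int, int]]:
    """Yield (start, stop) row bounds covering n_rows in steps of chunk_rows."""
    if chunk_rows <= 0:
        raise ValueError("chunk_rows must be positive")
    for start in range(0, n_rows, chunk_rows):
        yield start, min(start + chunk_rows, n_rows)
```

Because it is a generator, a display callback can request only the next window instead of loading the whole series.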