
Introduction

The Merlin local HPC cluster

Historically, the local HPC clusters at PSI were named Merlin. Over the years, multiple generations of Merlin have been deployed.

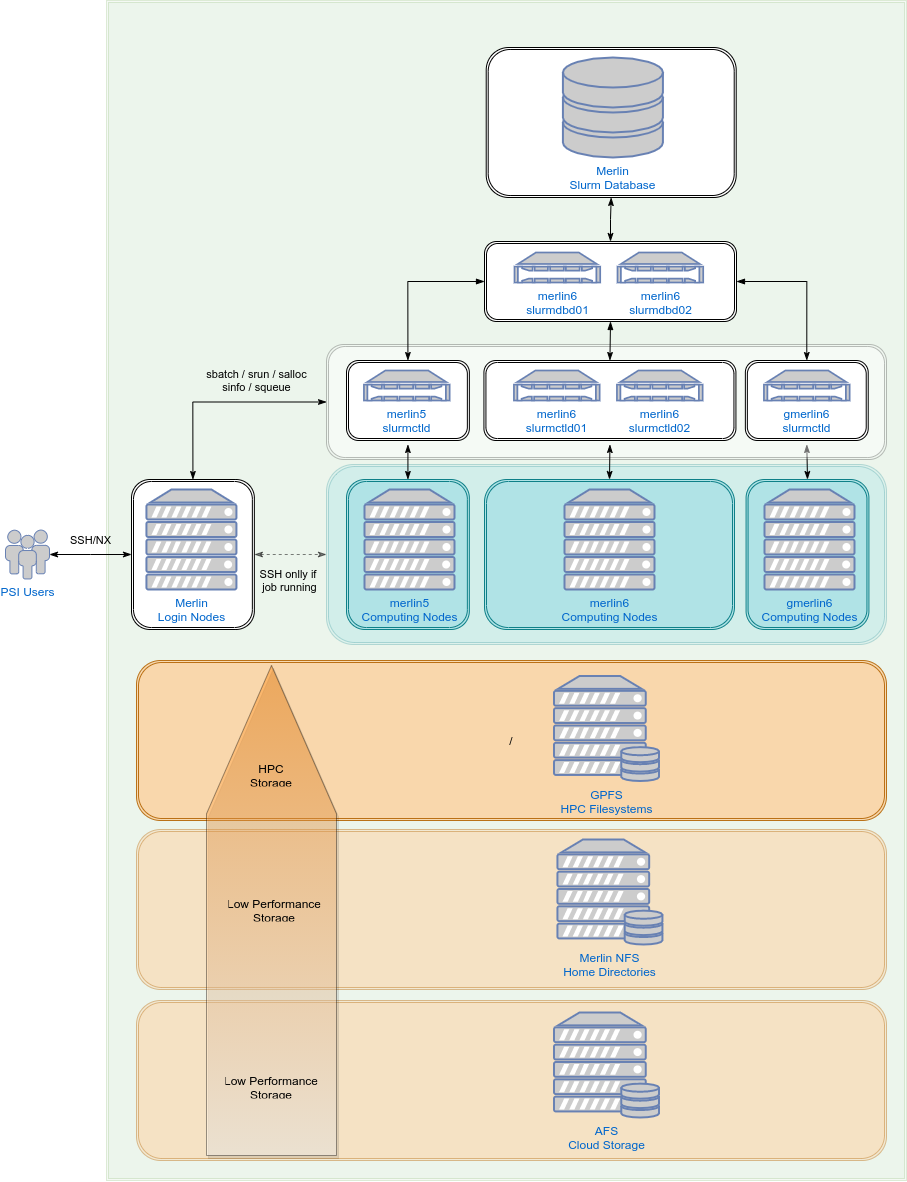

Access to the different Slurm clusters is possible from the Merlin login nodes, which can be accessed through the SSH protocol or the NoMachine (NX) service.

The following image shows the Slurm architecture design for the Merlin5 & Merlin6 (CPU & GPU) clusters:

Merlin6

Merlin6 is the official PSI local HPC cluster for development and mission-critical applications. It was built in 2019 and replaces the Merlin5 cluster.

Merlin6 is designed to be extensible, so it is technically possible to add more compute nodes and cluster storage without a significant increase in manpower and operational costs.

Merlin6 contains all the main services needed for running a cluster, including login nodes, storage, computing nodes and other subservices, all connected to the central PSI IT infrastructure.

CPU and GPU Slurm clusters

The Merlin6 computing nodes are mostly based on CPU resources. However, in the past the cluster also contained a small number of GPU-based resources, which were mostly used by the BIO Division and by Deep Learning projects. Today, only Gwendolen is available on `gmerlin6`.

These computational resources are split into two different Slurm clusters:

- The Merlin6 CPU nodes are in a dedicated Slurm cluster called `merlin6`.
  - This is the default Slurm cluster configured in the login nodes: any job submitted without the option `--cluster` will be submitted to this cluster.
- The Merlin6 GPU resources are in a dedicated Slurm cluster called `gmerlin6`.
  - Users submitting to the `gmerlin6` GPU cluster need to specify the option `--cluster=gmerlin6`.
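The cluster selection described above can be illustrated with a small dry-run sketch. The helper `build_sbatch_cmd` and the script name `job.sh` are hypothetical and exist only for this example; the helper prints the `sbatch` command line instead of running it, so the difference between the default and the GPU cluster is visible without submitting anything. It assumes the `--cluster` option is spelled exactly as stated above.

```shell
#!/bin/bash
# Hypothetical helper: print the sbatch command for a given Slurm cluster.
# An empty cluster name means "rely on the default", which on the Merlin
# login nodes is the merlin6 CPU cluster.
build_sbatch_cmd() {
    local cluster="$1"
    local script="$2"
    if [ -z "$cluster" ]; then
        # No --cluster option: the job goes to the default cluster (merlin6).
        echo "sbatch $script"
    else
        # Explicit cluster, as required for the gmerlin6 GPU cluster.
        echo "sbatch --cluster=$cluster $script"
    fi
}

# job.sh is a placeholder batch script name (assumption for illustration).
build_sbatch_cmd "" job.sh          # CPU job on the default cluster
build_sbatch_cmd gmerlin6 job.sh    # GPU job on gmerlin6
```

On a login node, the printed commands could then be run as they are, replacing `job.sh` with a real batch script.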